Abstract

Visual impairments are a global health issue with profound socio-economic ramifications in both the developing and the developed world. Ongoing research projects aim to investigate the influence of light on the perception of low vision individuals, but as of today there is neither clear knowledge nor extensive data on the influence of light in low vision situations. This research addresses these issues by introducing a methodology and a system to simulate visual impairments. A pipeline based on eye anatomy, coupled with real-time image processing algorithms, makes it possible to dynamically simulate in mixed reality the characteristics specific to selected low vision impairments. An original approach based on massively parallel processing, combined with efficient modeling of the eye's refractive errors, aims to improve the accuracy of the low vision simulation.

Reference

Valeria Acevedo, Philippe Colantoni, Eric Dinet and Alain Trémeau, "Real-time Low Vision Simulation in Mixed Reality," in 2022 16th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Dijon, France, 2022, pp. 354-361.

doi: 10.1109/SITIS57111.2022.00060

Objective

Build a tool able to simulate the most common visual impairments that is:

- customizable on a per-patient basis

- as realistic as possible

- real-time

- able to mix different impairment submodels
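To make per-patient customization and submodel mixing concrete, here is a minimal sketch of a configuration structure. It assumes that each impairment submodel is driven by a single severity value in [0, 1], set independently for each eye. The submodel names, the severity scale and the `EyeProfile` / `PatientProfile` classes are hypothetical, because the source does not describe the tool's actual parameters.

```python
from dataclasses import dataclass, field

# Hypothetical submodel names; the source does not list the tool's parameters.
KNOWN_SUBMODELS = {"defocus", "tunnel_vision", "glare", "contrast_loss"}


@dataclass
class EyeProfile:
    # Maps a submodel name to a severity in [0, 1]; absent means "not simulated".
    severities: dict[str, float] = field(default_factory=dict)

    def validate(self) -> None:
        # Reject unknown submodels and out-of-range severities.
        for name, value in self.severities.items():
            if name not in KNOWN_SUBMODELS:
                raise ValueError(f"unknown submodel: {name}")
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"severity for {name} out of range: {value}")

    def active(self) -> list[str]:
        # Submodels with a non-zero severity, in a stable (sorted) order.
        return sorted(n for n, v in self.severities.items() if v > 0.0)


@dataclass
class PatientProfile:
    # One profile per eye, so the two eyes can be impaired differently.
    left: EyeProfile
    right: EyeProfile

    def validate(self) -> None:
        self.left.validate()
        self.right.validate()


patient = PatientProfile(
    left=EyeProfile({"defocus": 0.4, "tunnel_vision": 0.7}),
    right=EyeProfile({"defocus": 0.1}),
)
patient.validate()
print(patient.left.active())  # ['defocus', 'tunnel_vision']
```

Mixing submodels then amounts to enabling several entries for the same eye. How their effects are combined and in which order is a separate pipeline decision that this sketch does not address.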

Proposed solution

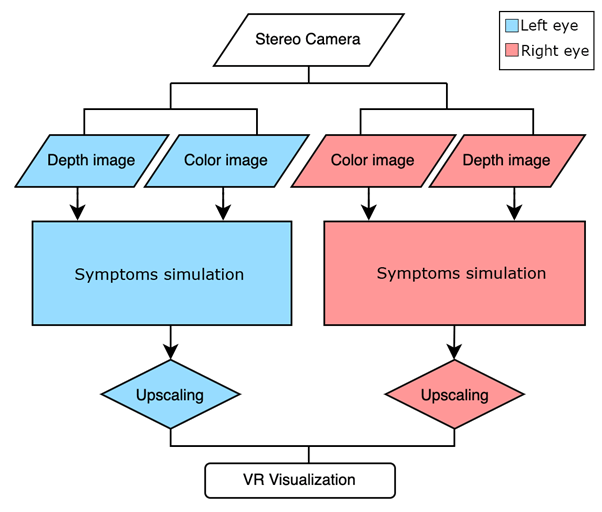

The proposed solution is a dual pipeline: a new system that acquires video from both sensors of a stereo camera. In this way, the user experiences a see-through mixed reality system that reduces their visual abilities in a controlled environment, to specific degrees as needed, on a per-eye basis.
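The dual-pipeline idea can be sketched as two independent filter chains, one per eye, applied to the left and right frames of each stereo pair. This is a minimal CPU-only illustration under stated assumptions. Frames are plain nested lists of grayscale values in [0, 1] rather than GPU buffers, and `run_chain`, `process_stereo` and the `dim` filter are hypothetical names, not the system's actual API.

```python
from typing import Callable

# A frame is a row-major grid of grayscale intensities in [0, 1]. A real system
# would use GPU buffers; plain lists keep the sketch self-contained.
Frame = list[list[float]]
Filter = Callable[[Frame], Frame]


def run_chain(frame: Frame, chain: list[Filter]) -> Frame:
    # Apply each symptom filter in order; later filters see earlier output.
    for f in chain:
        frame = f(frame)
    return frame


def process_stereo(left: Frame, right: Frame,
                   left_chain: list[Filter],
                   right_chain: list[Filter]) -> tuple[Frame, Frame]:
    # The two eyes are processed independently, each with its own chain.
    return run_chain(left, left_chain), run_chain(right, right_chain)


def dim(factor: float) -> Filter:
    # Hypothetical example filter: uniform luminance reduction.
    return lambda fr: [[p * factor for p in row] for row in fr]


left = [[1.0, 0.5], [0.2, 0.8]]
right = [row[:] for row in left]
out_l, out_r = process_stereo(left, right, [dim(0.5)], [])
print(out_l)  # [[0.5, 0.25], [0.1, 0.4]]
print(out_r)  # [[1.0, 0.5], [0.2, 0.8]]
```

Keeping the chains separate is what allows the two eyes to receive different impairments or severities. In a GPU implementation, each chain could run on its own stream.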

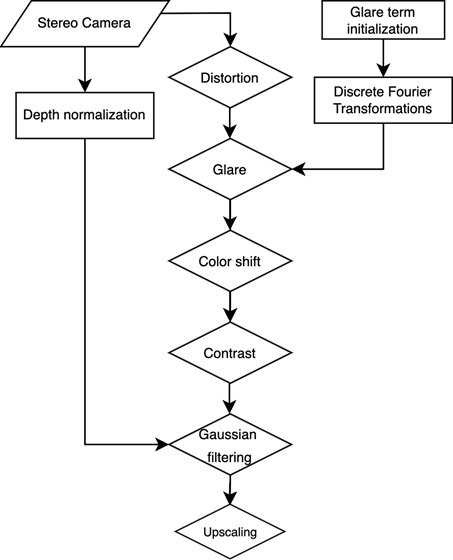

Per-symptom pipeline

To design the pipeline, a comprehensive analysis was conducted to assess the impact of each impairment on the final displayed image.

Every symptom except glare was implemented on the GPU using CUDA to speed up processing. Glare, which is processed on the CPU, was instead accelerated with OpenMP to make use of the CPU's multi-core computing power and achieve higher speeds.

System

The system uses the ZED Mini, a stereo camera designed for mixed reality, chosen for its low-latency video passthrough.

| Resolution | Frame rate | Video mode |
|---|---|---|
| 1920×1080 | 30 fps | Stereo passthrough |
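A quick back-of-the-envelope check shows what the 1920×1080 at 30 fps mode implies for the real-time objective. It assumes that every symptom is applied to both full-resolution camera streams, because the source does not state the internal processing resolution. The helper names are illustrative only.

```python
def frame_budget_ms(fps: float) -> float:
    # Time available per frame before the camera delivers the next one.
    return 1000.0 / fps


def pixel_rate(width: int, height: int, fps: float, eyes: int = 2) -> float:
    # Pixels per second the pipeline must process, summed over all eyes.
    return width * height * fps * eyes


print(f"{frame_budget_ms(30):.1f} ms per frame")           # 33.3 ms per frame
print(f"{pixel_rate(1920, 1080, 30) / 1e6:.1f} Mpixel/s")  # 124.4 Mpixel/s
```

If frames are processed one at a time, the entire symptom chain for both eyes has to finish within roughly 33 ms to keep up with the camera. At about 124 million pixels per second, this throughput favors the massively parallel GPU processing described above.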

Results

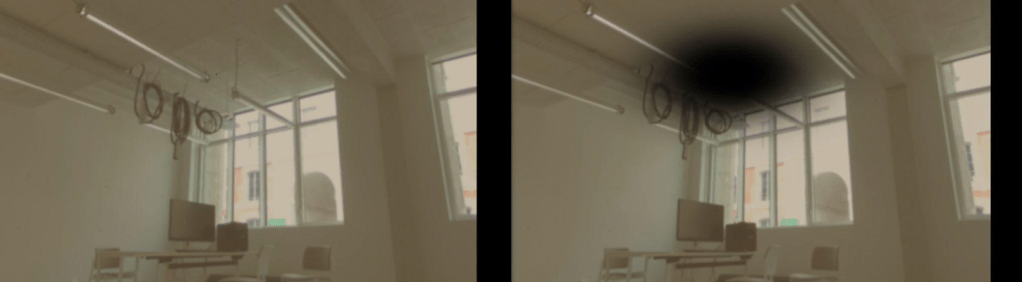

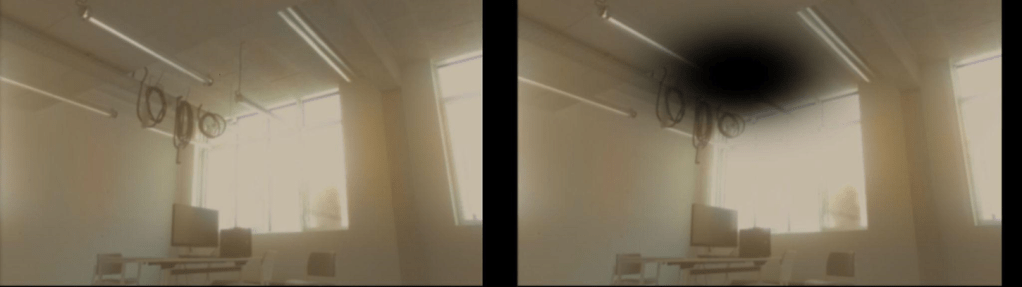

Depth perception

Farsightedness

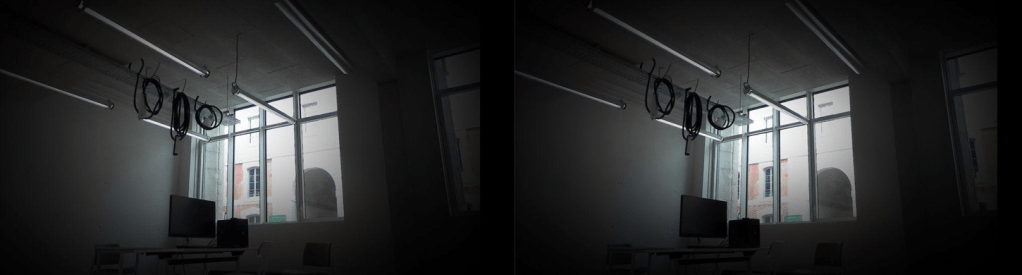
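Farsightedness, like other refractive errors, is commonly simulated as defocus blur. The sketch below assumes a textbook geometric-optics approximation: the angular blur-circle diameter in radians is roughly the pupil diameter in meters multiplied by the defocus in diopters. It also assumes a uniform disc point spread function. This is not necessarily the refractive-error model used in the paper, and it ignores accommodation, diffraction and higher-order aberrations. The `pixels_per_degree` parameter is a hypothetical property of the display.

```python
import math


def blur_diameter_deg(defocus_diopters: float, pupil_mm: float) -> float:
    # Geometric-optics approximation: angular blur-circle diameter (rad)
    # is roughly pupil diameter (m) times defocus (D).
    beta_rad = (pupil_mm / 1000.0) * abs(defocus_diopters)
    return math.degrees(beta_rad)


def blur_diameter_px(defocus_diopters: float, pupil_mm: float,
                     pixels_per_degree: float) -> float:
    # Convert the angular blur to display pixels (pixels_per_degree is a
    # hypothetical property of the headset display).
    return blur_diameter_deg(defocus_diopters, pupil_mm) * pixels_per_degree


def disc_kernel(diameter_px: float) -> list[list[float]]:
    # Uniform, normalised disc ("pillbox") kernel, a common first-order
    # model of a defocused point spread function.
    r = max(diameter_px / 2.0, 0.5)
    n = int(math.ceil(r))
    k = [[1.0 if x * x + y * y <= r * r else 0.0
          for x in range(-n, n + 1)] for y in range(-n, n + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


d = blur_diameter_px(defocus_diopters=2.0, pupil_mm=4.0, pixels_per_degree=20.0)
print(f"{d:.1f} px")  # 9.2 px
```

Each frame would then be convolved with `disc_kernel(d)`. Because every output pixel depends only on a small neighborhood of input pixels, this per-pixel operation parallelizes well on a GPU.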

Tunnel vision

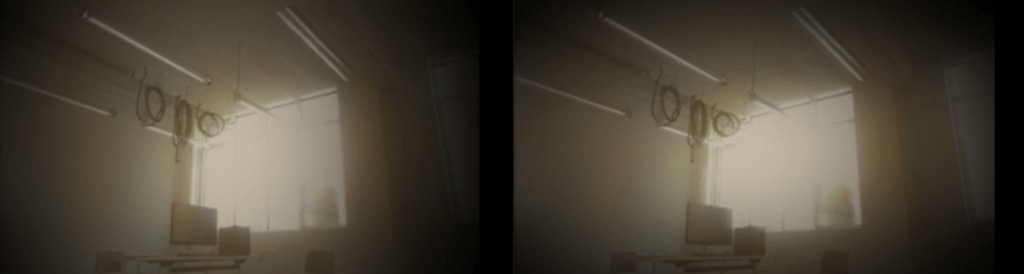
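Tunnel vision can be sketched as a loss of the peripheral visual field. This minimal sketch assumes a circular preserved field centered on a fixation point, with a linear falloff to a uniform background. The fixation point, the radius and feather width in pixels, and the black background are hypothetical choices for illustration, because the source does not specify how its tunnel-vision submodel is parameterized.

```python
import math


def tunnel_mask(width: int, height: int, cx: float, cy: float,
                radius: float, feather: float) -> list[list[float]]:
    # Per-pixel visibility weight: 1 inside the preserved central field,
    # 0 beyond radius + feather, with a linear ramp in between.
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            d = math.hypot(x - cx, y - cy)
            if d <= radius:
                w = 1.0
            elif d >= radius + feather:
                w = 0.0
            else:
                w = 1.0 - (d - radius) / feather
            row.append(w)
        mask.append(row)
    return mask


def apply_mask(frame: list[list[float]], mask: list[list[float]],
               background: float = 0.0) -> list[list[float]]:
    # Blend each pixel towards the background according to its mask weight.
    return [[w * p + (1.0 - w) * background for p, w in zip(prow, mrow)]
            for prow, mrow in zip(frame, mask)]
```

Because each pixel's weight depends only on its own coordinates, a mask of this kind maps naturally onto one GPU thread per pixel. It can also be precomputed once per patient profile and reused for every frame.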

Examples of multiple symptoms

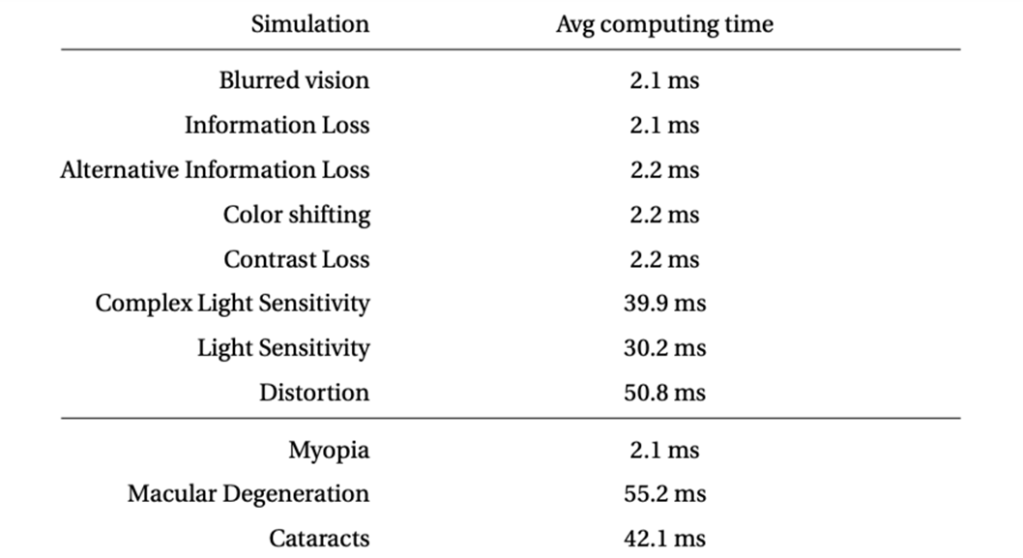

Benchmarks